
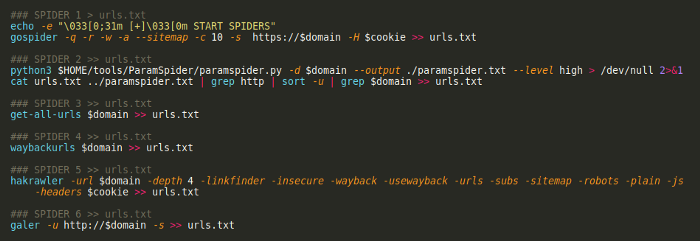

Automation of the reconnaissance phase during Web Application Penetration Testing II

by Karol Mazurek

This article continues the previous one in the series. After the first phase of reconnaissance, subdomain enumeration, you should have a lot of information about the company you are attacking. The...

